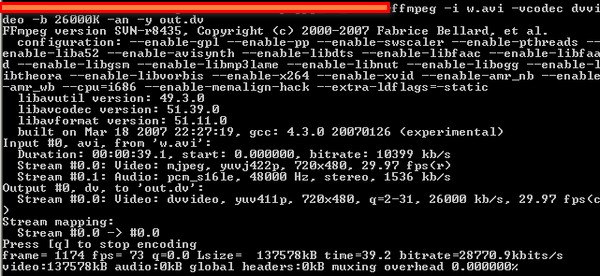

The client should select an Encoding GUID that represents the desired codec for encoding the video sequence.

Use of the OpenGL device type for encoding is supported only on Linux. NV_ENC_OPEN_ENCODE_SESSION_EX_PARAMS::device must be NULL and NV_ENC_OPEN_ENCODE_SESSION_EX_PARAMS::deviceType must be set to NV_ENC_DEVICE_TYPE_OPENGL.

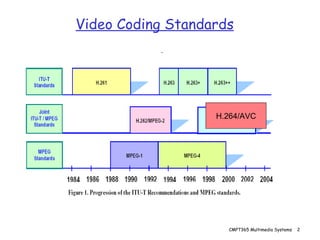

Use of a CUDA device for encoding is supported on Linux and on Windows 7 and later versions of the Windows OS. The client should create a floating CUDA context, pass the CUDA context handle as NV_ENC_OPEN_ENCODE_SESSION_EX_PARAMS::device, and set NV_ENC_OPEN_ENCODE_SESSION_EX_PARAMS::deviceType to NV_ENC_DEVICE_TYPE_CUDA.

Use of DirectX 12 devices is supported only on Windows 10 20H1 and later versions of the Windows OS.

For DirectX devices, the client should pass a pointer to the IUnknown interface of the created device (typecast to void *) as NV_ENC_OPEN_ENCODE_SESSION_EX_PARAMS::device, and set NV_ENC_OPEN_ENCODE_SESSION_EX_PARAMS::deviceType to NV_ENC_DEVICE_TYPE_DIRECTX. Use of DirectX devices is supported only on Windows 7 and later versions of the Windows OS. For DirectX 9, the client should create the device with behavior flags including D3DCREATE_FPU_PRESERVE, D3DCREATE_MULTITHREADED and D3DCREATE_HARDWARE_VERTEXPROCESSING.

The NVIDIA Encoder supports use of the following types of devices: DirectX, CUDA and OpenGL.

After loading the DLL or shared object library, the client's first interaction with the API is to call NvEncodeAPICreateInstance. This populates the input/output buffer passed to NvEncodeAPICreateInstance with pointers to functions which implement the functionality provided in the interface.

After loading the NVENC interface, the client should first call NvEncOpenEncodeSessionEx to open an encoding session. This function returns an encode session handle which must be used for all subsequent calls to the API functions in the current session.

For programmers preferring a more high-level API with ready-to-use code, the SDK includes sample C++ classes that expose important API functions. The rest of this document focuses on the C-API exposed in nvEncodeAPI.h.

NVENCODE API is designed to accept raw video frames (in YUV or RGB format) and output an H.264, HEVC or AV1 bitstream. Broadly, the encoding flow includes the following steps:

- Copy frames to input buffers and read bitstream from the output buffers. This can be done synchronously (Windows and Linux) or asynchronously (Windows 7 and above only).
- Clean-up: release all allocated input/output buffers.

These steps are explained in the rest of the document and demonstrated in the sample application included in the Video Codec SDK package.

NVENCODE API is a C-API, and uses a design pattern like C++ interfaces, wherein the application creates an instance of the API and retrieves a function pointer table to further interact with the encoder. The NVENCODE API functions, structures and other parameters are exposed in nvEncodeAPI.h, which is included in the SDK.
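
The function-table pattern described above can be illustrated with a small mock. This is only a sketch of the design idea, not the NVENCODE API itself: `DemoFunctionList`, `DemoCreateInstance`, `DEMO_VERSION` and the two session functions are hypothetical names invented for illustration, and the version check is part of the mock. The real structures and prototypes are the ones declared in nvEncodeAPI.h.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a table of API entry points.
   The caller owns the struct; the library fills in the pointers. */
typedef struct DemoFunctionList {
    uint32_t version;                     /* set by the caller before the call */
    int (*openSession)(void **session);   /* filled in by DemoCreateInstance   */
    int (*closeSession)(void *session);   /* filled in by DemoCreateInstance   */
} DemoFunctionList;

#define DEMO_VERSION 1u

/* Mock "library side": private implementations behind the table. */
static int demo_session_token;

static int demo_open(void **session)
{
    if (session == NULL)
        return -1;
    *session = &demo_session_token;       /* opaque handle returned to caller */
    return 0;
}

static int demo_close(void *session)
{
    return (session == &demo_session_token) ? 0 : -1;
}

/* Mock "create instance" call: validates the caller's struct and
   populates it with function pointers. Returns 0 on success, -1 on error. */
static int DemoCreateInstance(DemoFunctionList *list)
{
    if (list == NULL || list->version != DEMO_VERSION)
        return -1;
    list->openSession  = demo_open;
    list->closeSession = demo_close;
    return 0;
}
```

A caller would zero-initialize a `DemoFunctionList`, set `version`, call `DemoCreateInstance`, and from then on reach the library only through the pointers in the table, much as a C++ program calls methods through an interface.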

Developers can create a client application that calls NVENCODE API functions exposed by nvEncodeAPI.dll for Windows or libnvidia-encode.so for Linux. These libraries are installed as part of the NVIDIA display driver. The client application can link to these libraries at run-time using LoadLibrary() on Windows or dlopen() on Linux.
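
As a rough illustration of the run-time linking described above, the following Linux-only sketch opens the library with dlopen() and looks up the NvEncodeAPICreateInstance symbol with dlsym(). The helper name `load_nvenc_entry` and the opaque `nvenc_entry_fn` type are assumptions made so the sketch builds without the SDK; a real client would use the prototype from nvEncodeAPI.h. The exact file name to pass may differ between installations, so it is left as a parameter.

```c
#include <dlfcn.h>
#include <stdio.h>

/* Opaque function-pointer type (hypothetical). The real prototype of
   NvEncodeAPICreateInstance is declared in nvEncodeAPI.h. */
typedef void (*nvenc_entry_fn)(void);

/* Try to load the given shared object and resolve the
   NvEncodeAPICreateInstance symbol. On success, *lib_out holds the
   library handle (to be released later with dlclose) and the symbol
   address is returned. On failure, *lib_out is NULL and NULL is returned. */
static nvenc_entry_fn load_nvenc_entry(const char *soname, void **lib_out)
{
    nvenc_entry_fn fn = NULL;

    *lib_out = dlopen(soname, RTLD_NOW | RTLD_LOCAL);
    if (*lib_out == NULL) {
        fprintf(stderr, "dlopen failed: %s\n", dlerror());
        return NULL;
    }

    /* POSIX idiom for storing a dlsym() result in a function pointer. */
    *(void **)(&fn) = dlsym(*lib_out, "NvEncodeAPICreateInstance");
    if (fn == NULL) {
        fprintf(stderr, "dlsym failed: %s\n", dlerror());
        dlclose(*lib_out);
        *lib_out = NULL;
        return NULL;
    }
    return fn;
}
```

A client might call `load_nvenc_entry("libnvidia-encode.so", &lib)` at start-up and fall back to a software path when it returns NULL, for example on a machine without the NVIDIA display driver. On older glibc versions the program may need to be linked with `-ldl`.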
